Empirical Methods in Natural Language Processing conference, which was held in China.

at the 15th Mozilla Festival in Barcelona.

And we won!

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) in Masdar City, Abu Dhabi.

Yes. That's why we created EDIA, a tool that enables you to uncover these biases without needing technical knowledge.

were related to gender issues.

*Conducted by individuals aged 12 to 14 in 2023.

What is EDIA about?

EDIA is a project by the Vía Libre Foundation. Our goal is to get more people involved in evaluating AI technologies such as language models (e.g. ChatGPT).

This tool seeks to explore artificial intelligence from a social and intersectional perspective.

Who can inspect the biases?

- Teachers who want to work with students on developing a critical view of AI

- Parties interested in researching AI across various fields

- Social science communities

- Students

- Anyone! All it takes is willingness. Give it a try!

Laura Alonso Alemany spoke about it in her talk "Who Can Inspect Biases in AI", as part of the workshop "Artificial Intelligence and Philosophy."

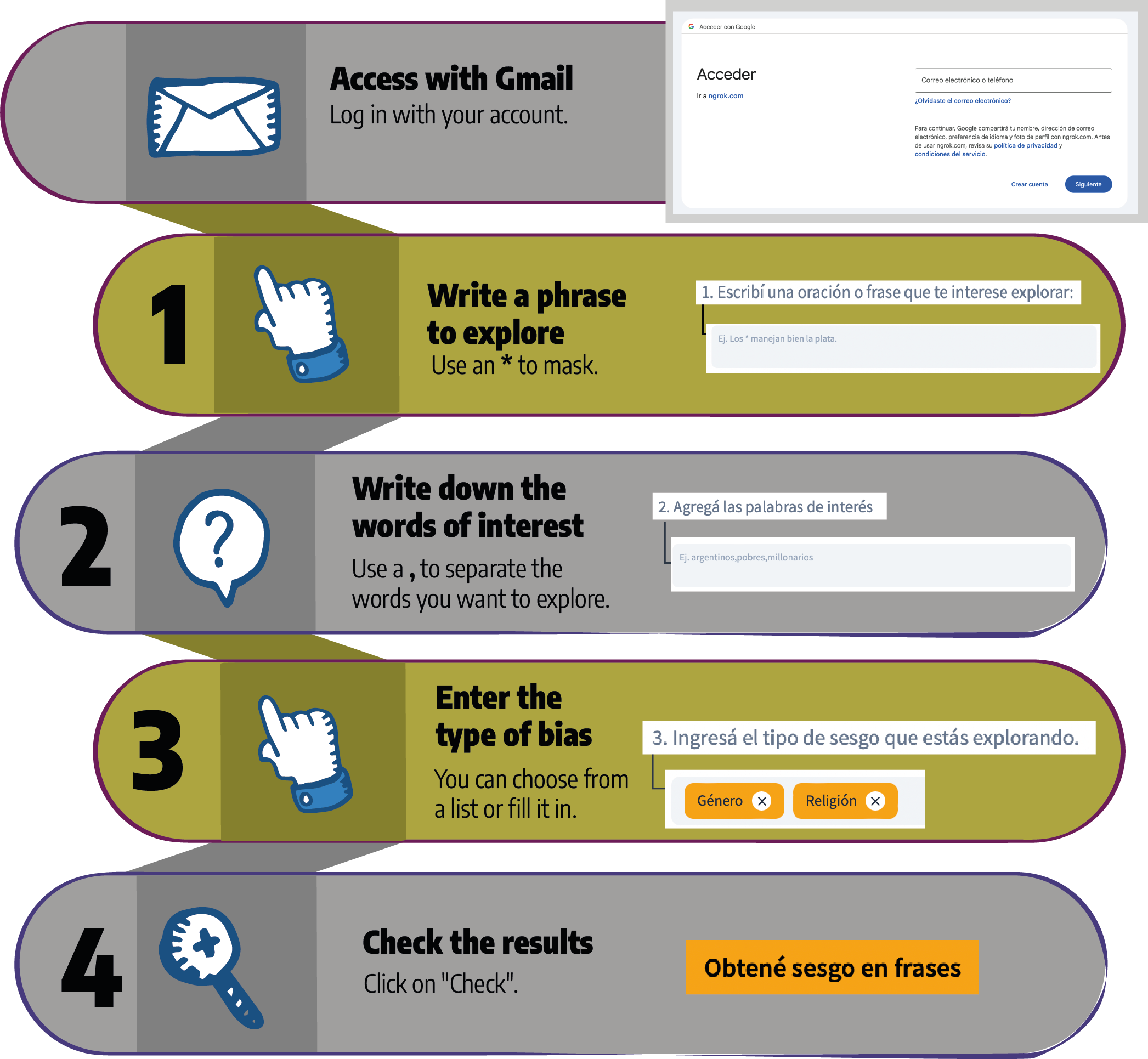

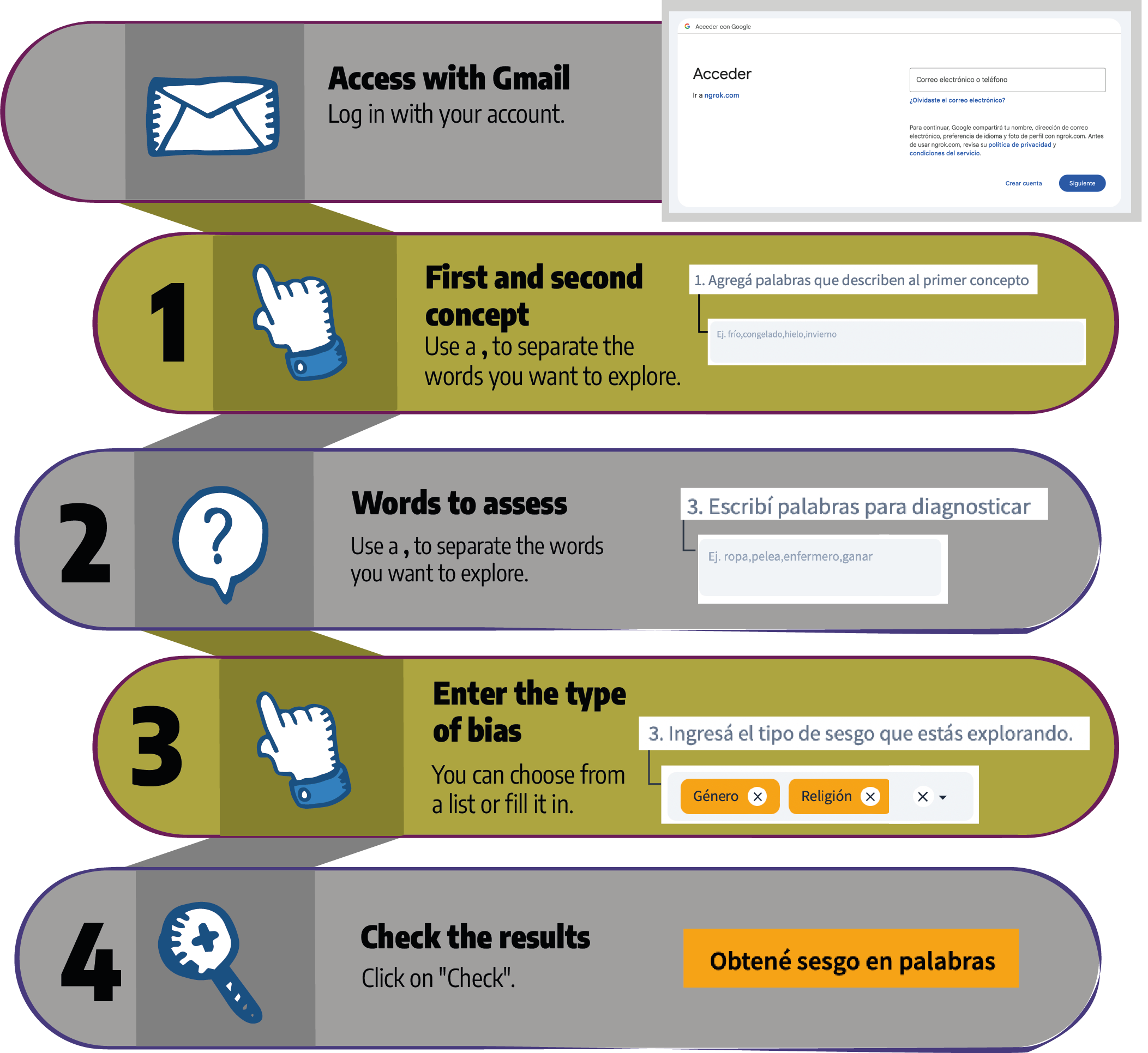

How to use EDIA

Inspect biases in AI.

You can investigate using these four methods:

- Bias in phrases

- Bias in words

- Explore words

- Explore contexts

This method works with pairs of phrases: one phrase contains (a) a stereotype, and the other contains (b) an anti-stereotype.

We seek to identify the preferences of a pre-trained language model.

In a model without bias, the stereotype and the anti-stereotype receive the same level of preference; a biased model prefers one over the other.
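The comparison described here can be sketched in a few lines of Python. This is only an illustration, not EDIA's implementation: the function names, the tolerance value, and the two scores are hypothetical, and the scores stand in for whatever log-likelihood-style number a language model assigns to each phrase.

```python
import math


def relative_preference(score_a, score_b):
    """Convert two log-scores into shares that sum to 1 (a two-way softmax)."""
    highest = max(score_a, score_b)
    weight_a = math.exp(score_a - highest)  # subtract the max for numerical stability
    weight_b = math.exp(score_b - highest)
    total = weight_a + weight_b
    return weight_a / total, weight_b / total


def describe_preference(score_stereotype, score_anti_stereotype, tolerance=0.05):
    """Say which phrase the model leans toward, if any.

    `tolerance` is an assumed threshold for calling the shares "the same".
    """
    stereo_share, anti_share = relative_preference(
        score_stereotype, score_anti_stereotype
    )
    gap = stereo_share - anti_share
    if abs(gap) <= tolerance:
        return "no clear preference"
    return "prefers stereotype" if gap > 0 else "prefers anti-stereotype"


# Hypothetical scores for a pair of phrases; real values would come from a model.
stereotype = "nursing is a profession for women"
anti_stereotype = "nursing is a profession for women and men"
print(stereotype, "|", anti_stereotype)
print(describe_preference(-8.0, -12.0))
```

Equal scores give each phrase a share of 0.5, which corresponds to the unbiased case; the further the shares drift apart, the stronger the model's preference.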

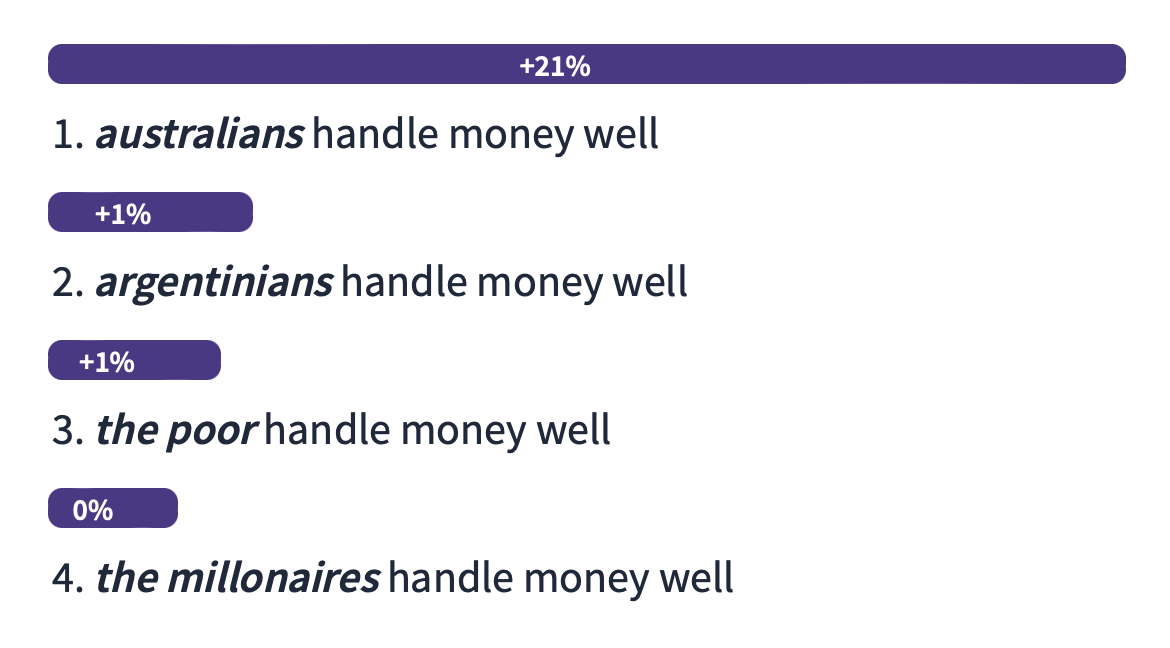

Results

Let's see the model's preference for one sentence over another. Here's an example:

Still have questions?

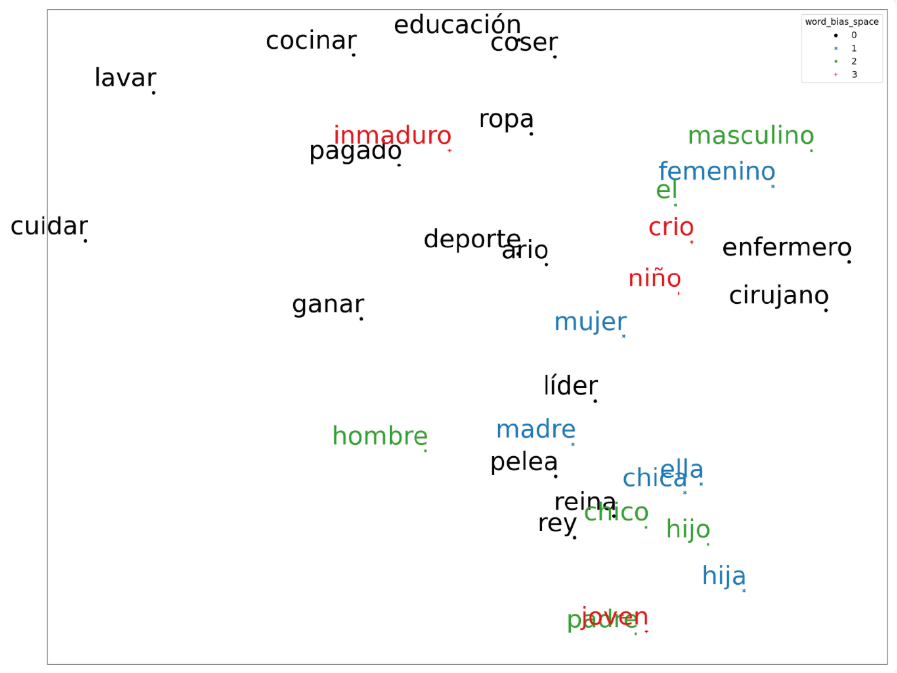

You will be able to visualize the distribution of words in a 2D space and observe the distance between them.

The more contexts two words share, the closer together they are displayed; the fewer they share, the farther apart.

Words with similar meanings will appear closer together.

Results

We will observe the model's preference based on proximity or distance.

Still have questions?

This tool allows you to explore and understand the semantic relationships between words in a vector space.

Each word in a language is an arrow on a map. When two words have a similar meaning, their arrows point in similar directions on the map.

The visualizer takes these arrows and displays them so you can see which words are close to each other in that space.
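To make the arrow picture concrete, here is a minimal sketch of how "pointing in similar directions" can be measured with cosine similarity. The two-dimensional vectors below are invented for illustration; real word vectors have many more dimensions, and nothing here is taken from EDIA's code.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two arrows: 1 means same direction, 0 means perpendicular."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def nearest_word(word, vectors):
    """Return the other word whose arrow points most nearly in the same direction."""
    target = vectors[word]
    others = (w for w in vectors if w != word)
    return max(others, key=lambda w: cosine_similarity(target, vectors[w]))


# Hypothetical toy vectors, chosen by hand for this example.
toy_vectors = {
    "nurse": (0.9, 0.4),
    "doctor": (0.8, 0.5),
    "banana": (-0.3, 0.9),
}

print(nearest_word("nurse", toy_vectors))
```

In this toy map the arrows for "nurse" and "doctor" point in almost the same direction, so they count as close, while "banana" points elsewhere.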

Results

You can see a map that helps you explore and understand how words in a language are related in terms of meaning.

Still have questions?

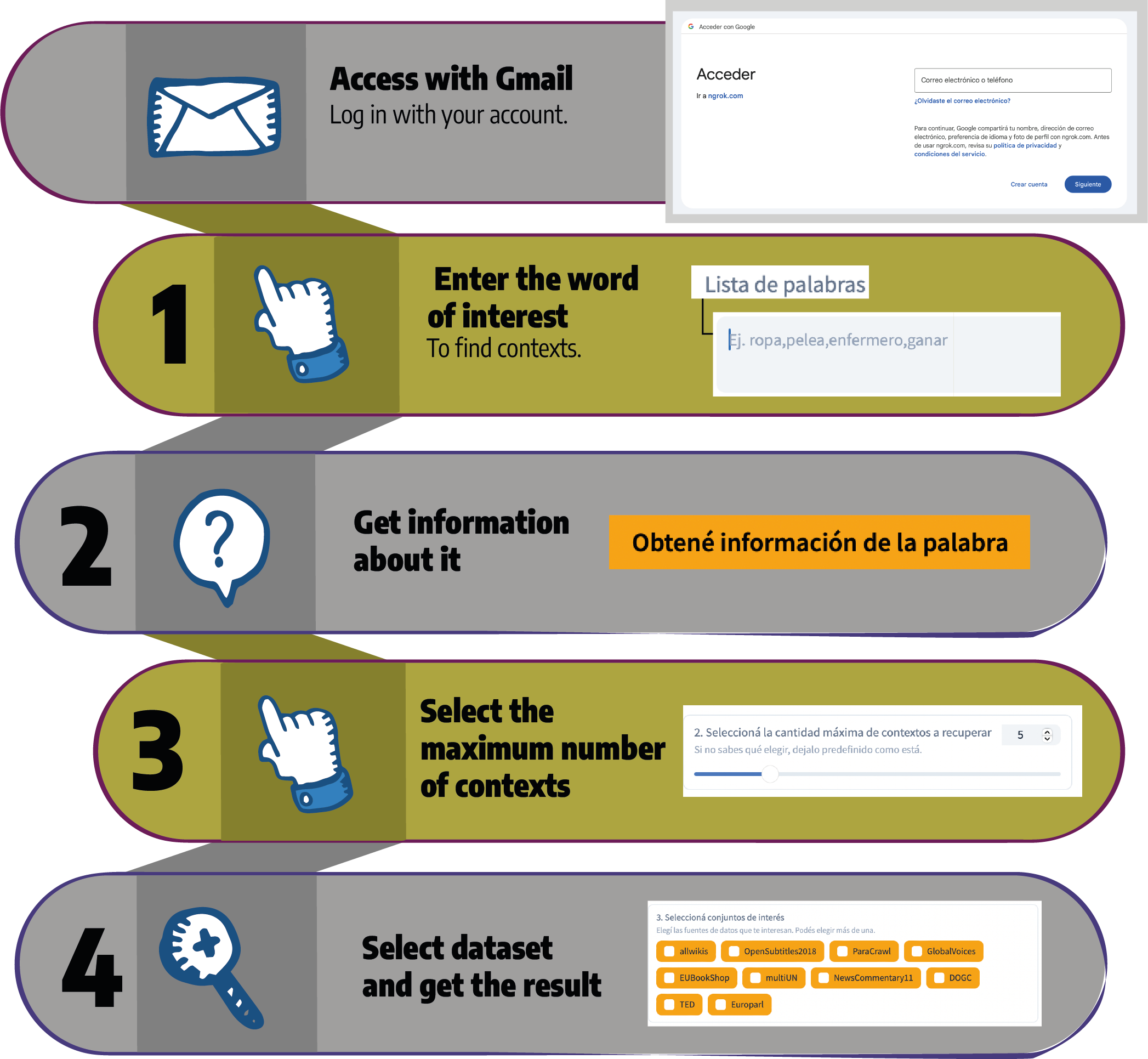

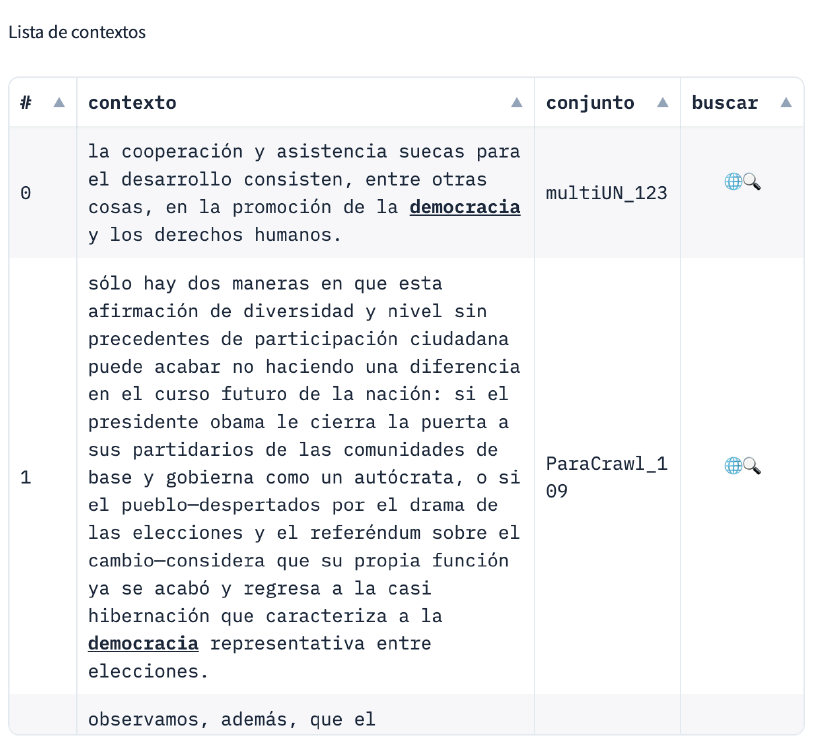

You can retrieve context information for a given word within an available data source.

Where is my word of interest used and in what context?
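The idea of looking up a word's contexts can be sketched as a simple search over a list of sentences. This is an assumption-laden illustration: the corpus below is made up, the function name is hypothetical, and EDIA's actual data sources and search method are not described here.

```python
import re


def find_contexts(word, sentences):
    """Return the sentences that contain `word` as a whole word, ignoring case."""
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    return [sentence for sentence in sentences if pattern.search(sentence)]


# Hypothetical mini corpus standing in for a real data source.
toy_corpus = [
    "The nurse checked on the patient.",
    "Nursing is a demanding profession.",
    "A nurse and a surgeon worked together.",
]

for context in find_contexts("nurse", toy_corpus):
    print(context)
```

Matching on whole words keeps "nurse" from also returning sentences that only mention "nursing", which makes it easier to read how the exact word of interest is used.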

Results

We will see a list of contexts where the word you searched for appears. For example:

Events > see activities we participate in

FAQ: Frequently Asked Questions

The primary goal is to foster critical literacy about generative artificial intelligence. Additionally, EDIA aims to collaboratively create a dataset for evaluating these AI technologies.

Finally, it aims to promote citizen awareness of, and participation in, AI, a dominant field in today’s society.

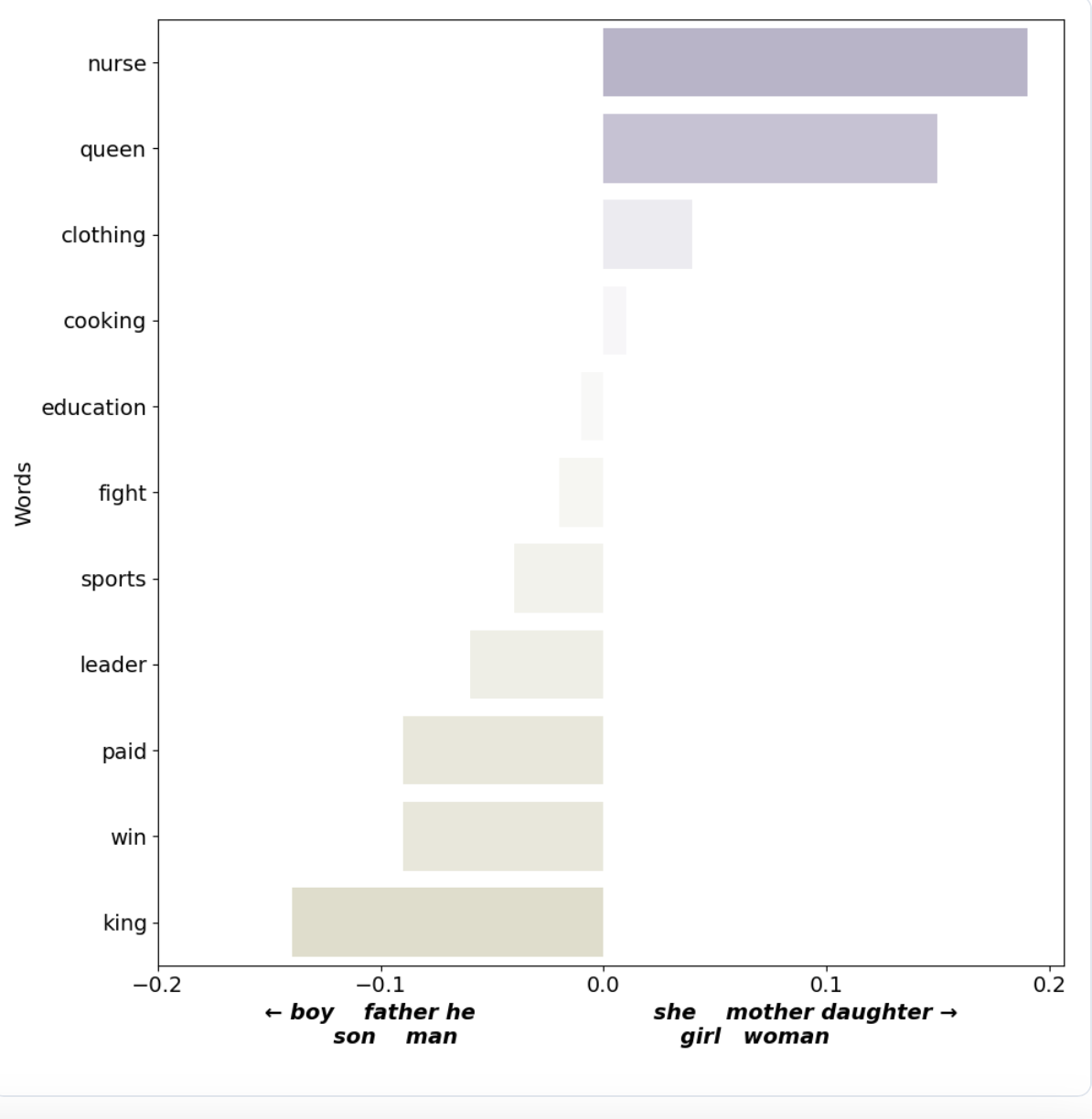

Yes, artificial intelligence can discriminate. This often occurs due to biases or prejudices present in the training data used for the model. If the examples used to train an artificial intelligence system contain biases or inequalities, the model may amplify these biases. For example, if it sees many texts where surgeons are men and nurses are women, it may prefer texts like “nursing is a profession for women” over texts like “nursing is a profession for women and men.” Perhaps this isn’t something that people thought about before.

For example, if an artificial intelligence system is trained to make hiring decisions based on historical data from previous hires, and that data reflects gender or neighborhood biases, the model could learn to favor certain groups and inadvertently discriminate against others. That’s why it’s always important to ask ourselves, “What will the model be used for?” and “Where does the data come from?”

For example, we probably don’t want to build a vocational guidance system that recommends professions using a gender-biased model, but such a model could be useful for making inferences about people’s professions in the past.

The harm caused by biased models is not hypothetical. Here are two real cases:

- Amazon’s job application filtering algorithm excluded women because the company had hired very few women in the past.

- The algorithm that automatically assigned school grades to British students during the COVID-19 pandemic treated a student’s neighborhood as a highly determining factor because, in the past, there was a strong correlation between neighborhood and grades.

Imagine a group of teenage students using a vocational guidance website. Suppose this system is trained on historical data about career choices and past income patterns. The system could start to favor certain professions or fields that have historically been more popular among a specific gender or a particular socioeconomic group. For example, it might more frequently suggest careers in technology to male students while recommending fields like nursing to female students, based on past patterns. Or it could suggest international relations or business to students from higher socioeconomic classes and agricultural professions to students from other socioeconomic classes.

This bias can have a negative impact on teenagers by conditioning their choices, limiting their options, and reinforcing gender or socioeconomic stereotypes. Students may feel pressured to choose careers that do not match their real interests and abilities due to AI recommendations. This could lead to an unequal distribution of opportunities and perpetuate inequality in certain professional fields.

Even if we wanted to, would we be able to escape technological advances? If your answer is no, then let’s try opening Pandora’s box and start dissecting artificial intelligence, understanding how it works from within. It’s crucial to talk about ethics in artificial intelligence, from the companies funding new technologies to its use in the classroom and in our daily lives.

If we start asking: Did you use ChatGPT or any other artificial intelligence to present the assignment? How did you use it? Did you read and copy exactly, or change the output? Did you find the response correct, or do you have objections to it? These are questions that can be asked in the classroom and lead us to a more critical, more empowering way of using these tools.

And we can also examine and judge this tool. That’s where EDIA comes in, as a tool to explore, characterize, and ultimately judge those biases and stereotypes that we may find at the core of these tools. And here comes the other question:

Always! If you liked it and you believe you can replicate the experience in the classroom, please do so! Here is the access link: http://edia.ngrok.app

If you’ve used it, and you have any lesson plans or suggestions to share with us, you can reach us at the email: eticaenia@vialibre.org.ar

We know it does, and it’s something we’re researching further. In the meantime, here is a little about it. Using these technologies requires data centers: giant infrastructures of computers running all the time, mostly on non-renewable energy. They also generate a great deal of heat. You’ve probably experienced this with your own computer after hours of use. The same happens in these centers, and cooling them takes liters and liters of water, or a lot of air, to compensate for the heat they emit.

There’s talk that artificial intelligence will come to take our jobs. Or we imagine a dystopian world with Terminator as the happy ending. Was the hammer guilty of the homicide? Or was it the person using the tool who was responsible? Starting from this premise, and knowing that artificial intelligence is a technology, whether we use it or not is up to us. Knowing that it exists, understanding it, and taking it apart are crucial for this era of change.

Have any further questions? Feel free to reach out to us.