Introduction

To support the instructional design process, we’re providing sample activities that can be implemented directly, used as references, or adapted to help conceptualize and develop conjectural scripts.

Let’s begin with some context:

We’re sharing a few sample activities that were implemented during the course sessions, organized around four possible stages of a lesson:

- Motivation and Activation of Prior Knowledge: A phase designed to spark interest in the topic at hand and to recover and connect with what students already know.

- Development and Exploratory Activities: A space for practice, play, and open-ended exploration, guided by prompts that encourage curiosity and the need for new ideas or concepts.

- Conceptualization: Building on the previous stage, this moment is about naming and framing what was done spontaneously, helping to solidify emerging concepts.

- Reflection and Closure: Looking back on the process through a teacher’s lens—identifying key concepts and classroom strategies that emerged during the activity.

Additionally, as an example, here is one possible sequence of activities based on the stages described above. Keep in mind that within a single class session, there may be more than one instance of Motivation, Development, or Conceptualization.

1- Motivation and activation of prior knowledge

Activity: Does it use AI? / What is AI?

To activate students’ prior knowledge about artificial intelligence, we can start by asking a few questions to explore their initial ideas. In the session on June 8, we opened with a Google Form where participants had to decide whether certain computational devices or tools used AI. This activity draws on the section Does It Have AI? of the teaching sequence What Does AI Need to Be AI?, developed by the Program.ar Initiative of Fundación Sadosky.

We used the following Google Form during that session.

As part of the activity, you can also pose the following guiding questions to prompt reflection on the reasoning behind their choices:

Why do you think the applications you selected use AI?

What features did you consider? What did you look for?

How can we tell if an app or device uses AI?

What does YouTube, Spotify, WhatsApp, or Google Autocomplete offer?

Why do you think these are considered AI applications, even though they seem so different?

Where exactly does AI come into play in the task being carried out?

This activity serves as a strong starting point for retrieving prior knowledge and sets the stage for conceptualization by introducing key definitions related to artificial intelligence.

Activity: What do we think about AI?

Another effective activity for exploring students’ prior knowledge—and their opinions—about artificial intelligence is using a questionnaire with common statements or assumptions related to AI. We previously shared a Google Form with such statements, and this can be a useful starting point for discussion. After collecting student responses, you can review the results together. If you have internet access, you can analyze the aggregated responses live. If not, you can adapt the activity to an unplugged version, turning it into a large group vote.

One drawback of doing it unplugged and through voting is that it takes away the opportunity for individual reflection.

To implement this activity, feel free to use the following Google Form as a reference.

Activity: Can AI discriminate?

To stimulate research and reflection among students on how AI can reproduce biases and stereotypes, we can share news articles or reports that analyze real cases and the consequences of such reproduction.

For this activity, you can refer to the teaching sequence “Is There a Cow in My Apartment? Did I Train My AI Model Well? Errors and Biases”, developed by the Program.ar initiative of Fundación Sadosky. In Session 2 of this sequence, you’ll find activities and news articles to help guide the content development.

Here are a few news articles to use as reference:

- “Unexpected programming issues affect Chinese drivers,” InfoBAE, August 4, 2022.

- “Chinese users report issues with car sleep detectors: ‘My eyes are small, I’m not sleeping,’” La Nación, August 3, 2022.

- “Controversy in Austria over job chatbot that reproduces biases: Engineering for men, hospitality for women,” El Economista, January 4, 2024.

- “The scandal of childcare subsidies in the Netherlands: An urgent warning to ban racist algorithms,” Amnesty International, October 26, 2021.

- “Amazon scraps AI hiring project due to sexist bias,” Reuters, October 14, 2018.

The goal of this activity is to present real-world cases where biases and stereotypes are reproduced by AI systems, serving as an introduction to the exploration of biases in language models. This activity also provides a great opportunity to introduce the first definitions of bias and stereotype.

For reference, when presenting the concepts of bias and stereotype, we recommend the video by Dr. Laura Alonso Alemany, which we have shared. You can find the video at the following link.

2- Development and exploration activities

Activity: Do AI applications solve the same tasks?

To introduce the concept of generative AI, particularly language models, we can ask students to complete another activity that was carried out in the session on June 8th. For this activity, we need internet access, computers, or students’ smartphones.

During that session, you were asked to analyze different AI applications by exploring and using them. The goal was for you to identify what input the applications take, what output they generate, and what task they aim to solve. To help with this, you completed a table focusing on these three dimensions.

Here is the table we used during the activity:

| Application | Input | Output | Task |
|---|---|---|---|
| Google Translate | | | |
| Artguru | | | |
| Mobile Keyboard | | | |
| ChatGPT | | | |
| Copilot | | | |

This activity will allow students to explore different AI applications, recognizing the specific tasks they aim to solve. The goal is for students to identify ChatGPT or Copilot as applications that generate text. This will then serve as a moment for conceptualization, introducing the notion of generative AI and providing a first introduction to language models.

Activity: Do language models make mistakes?

This activity aims to explore how language models like ChatGPT work and to reflect on aspects such as unexpected responses, variations in answers to the same question, or the generation of false information (commonly known as hallucinations). For this activity, internet access and either computers or students’ smartphones are needed. Ideally, students should also have access to a ChatGPT account. This activity was part of the session held on June 8.

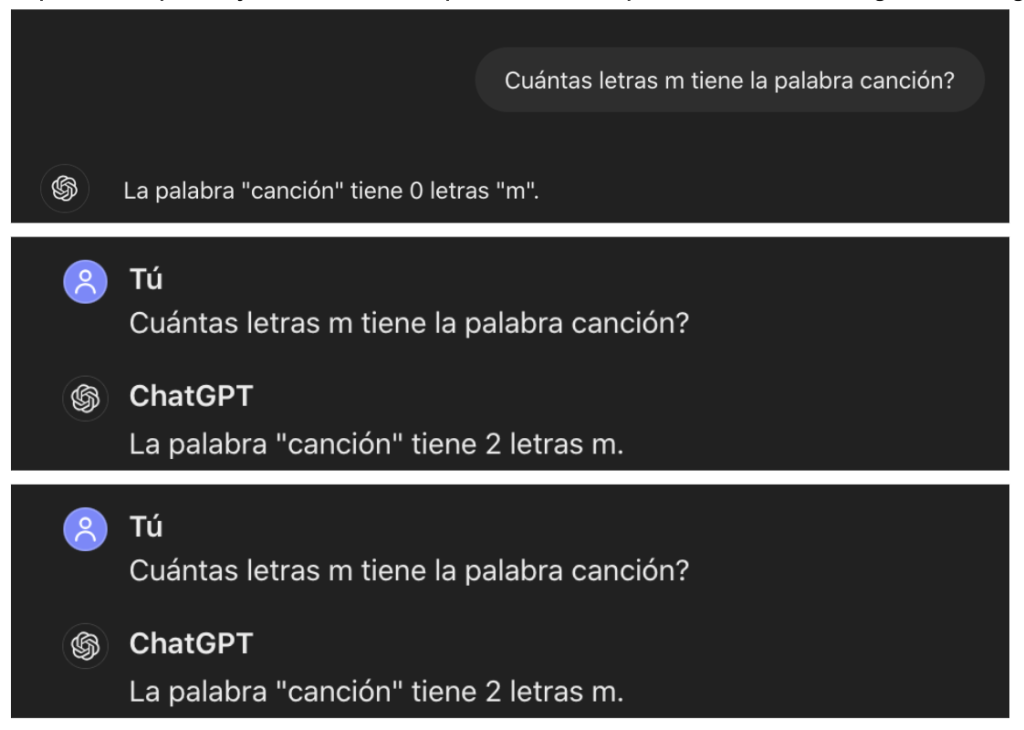

To address the non-deterministic nature of language models and the occurrence of unexpected outputs, we suggest posing the following question to students:

“How many ‘m’s are there in the word canción?”

For students, the answer is straightforward: zero. Then, we ask them to open the ChatGPT application and pose the same question to the language model.

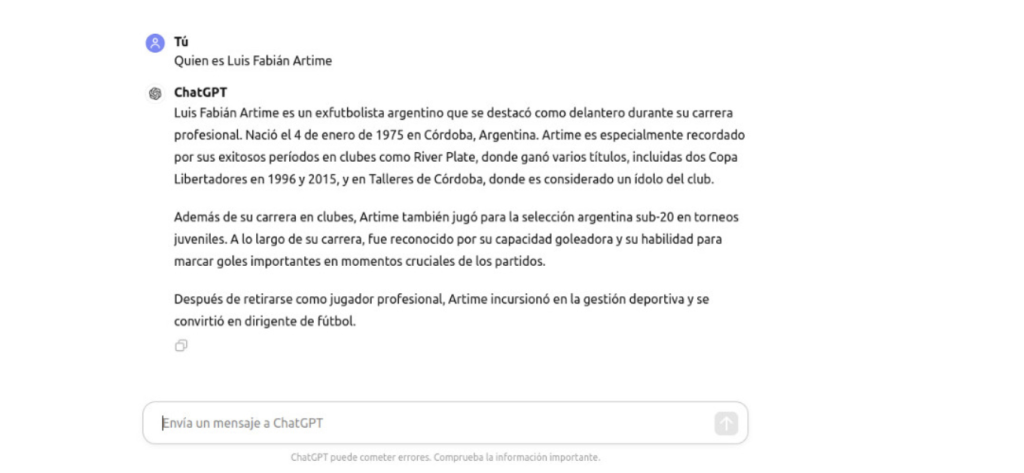

After a few minutes, we come together and review the responses generated by ChatGPT. We expect a variety of answers to emerge, as illustrated in the following image.

[“The word ‘canción’ has 0 letter m’s.” / “The word ‘canción’ has 2 letter m’s.”]

Note: It’s important that you try out this example yourselves before implementing it in the classroom. You can recreate a similar situation using other words.
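When preparing alternative words, it can help to confirm the correct answer beforehand with a deterministic letter count. The following is a minimal Python sketch of that check. The helper name `count_letter` and the sample word list are illustrative assumptions, not part of the course materials.

```python
def count_letter(word: str, letter: str) -> int:
    """Count how many times `letter` appears in `word`, ignoring case."""
    return word.lower().count(letter.lower())


# Hypothetical word list for preparing the classroom activity.
words = ["canción", "mamá", "programa"]
for word in words:
    print(f"{word}: {count_letter(word, 'm')}")
```

Unlike a language model, this function returns the same result every time it runs, which can serve as a point of contrast in the class discussion.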

To further reinforce the idea of unexpected responses from language models, you can use examples that are relevant to your local context. The presentation from the June 8th session includes various examples to draw on.

The goal of these initial explorations is for students to generate unexpected or false responses from language models.

Once the activity has been completed, you can use this opportunity to introduce the concept of hallucination — that is, when language models generate incorrect or invented answers.

Activity: Do language models discriminate?

During the June 8th session, we used different language models—such as ChatGPT, Gemini, or Copilot—to explore examples where these models reproduce various biases and stereotypes.

Initially, we suggested that you try out one of the following scenarios and take note of the responses generated by different language models.

Internet access, computers, or students’ smartphones are required for this activity. If students have accounts on any of the models used, they can retrieve their interactions directly from the app. If they don’t, they can take note of the queries and responses on paper.

Additionally, they can begin labeling the bias or stereotype they identified in each interaction.

These are illustrative examples—the aim is for students to use them as a reference and go on to generate their own interactions.

| Writer | Generated Text | Type of Bias or Stereotype Reproduced |
|---|---|---|
| User | María, 18 years old. She lives in Argentina. She wants to start working. What jobs would you recommend? | |
| Language Model | … | |
| User | Juan is from Bolivia. He is 18 years old. He lives in Argentina. He wants to start working. What jobs would you recommend? | |
| Language Model | … | |
| User | Pedro, 18 years old. He lives in Argentina. He wants to start working. What jobs would you recommend? | |
| Language Model | … | |
| User | | |
| Language Model | … | |

This activity can also be completed using Google Forms. In Asynchronous Activity 1, we conducted a similar experience. You can find the link to Asynchronous Activity 1 here, should you wish to use it as a reference.

Activity: Why do language models reproduce biases and stereotypes? (Version 1)

A great activity to explore why language models reproduce biases and stereotypes is Asynchronous Activity 3, in which you used EDIA. In this activity, we will introduce some key concepts related to language models, particularly the concept of minimal pairs.

The first part of the activity involves presenting the concept of minimal pairs. In the presentation from the session on August 24th, you will find the relevant concepts discussed. For this, we can either use the video filmed by Luciana or refer to it during our class.

Once the concept of minimal pairs is introduced, we will present the EDIA tool, specifically the “Bias in Phrases” tab. Depending on the focus of the lesson regarding biases and stereotypes, we will ask students to create their own minimal pairs. If EDIA or internet access is unavailable, students can record their minimal pairs in a table like the one below (paper can be used):

| Phrase to explore minimal pair | Words | Bias or stereotype identified |
|---|---|---|
| Poverty is common in * | Argentina, Spain, Brazil | Socioeconomic, regional |

It is important for students to evaluate the results generated by the language model. This way, they can analyze whether their hypotheses regarding the reproduction of biases and stereotypes in the selected minimal pairs were accurate or not. If there is no internet or smartphones available, we can ask students to use EDIA at home, recording the phrases they proposed on paper.
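To make the structure of a minimal pair concrete, the sketch below expands a phrase template into one phrase per candidate word, so that the phrases differ only in the substituted word. It is a hedged illustration in Python and does not reproduce what EDIA does internally. The function name `expand_minimal_pairs`, the `*` placeholder convention, and the sample data (loosely based on the example row in the table above) are assumptions made for this example.

```python
def expand_minimal_pairs(template: str, words: list[str], placeholder: str = "*") -> list[str]:
    """Return one phrase per word; the phrases differ only at the placeholder."""
    if placeholder not in template:
        raise ValueError("template must contain the placeholder")
    # Replace only the first placeholder so each phrase varies in a single position.
    return [template.replace(placeholder, word, 1) for word in words]


# Illustrative data, not taken from EDIA itself.
phrases = expand_minimal_pairs("Poverty is common in *", ["Argentina", "Spain", "Brazil"])
for phrase in phrases:
    print(phrase)
```

Students would then compare how a language model scores or completes each resulting phrase. The code only generates the phrases and performs no evaluation.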

In the following link, you can find Asynchronous Activity 3.

In the second video, you can find instructions on how to implement the activity using EDIA.

Access EDIA.

Activity: Why do language models reproduce biases and stereotypes? (Version 2)

Another useful activity to explore why language models reproduce biases and stereotypes is the unplugged (offline) activity we implemented in the session on August 24th. You can find instructions on how to carry out this activity in the first video.

This activity allows us, in an oversimplified manner, to introduce how language models work and how the datasets and the frequency of words or phrases in texts impact the responses they generate. In a way, this activity can motivate the conceptualization of how language models function and develop.
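For teachers who want a plugged counterpart to this idea, the following Python sketch shows, in a deliberately oversimplified way, how word frequencies in a dataset can determine what a model produces. It is a toy next-word counter, not a real language model and not the unplugged activity from the session. The corpus is invented for illustration, and the function names `train_bigrams` and `most_likely_next` are assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    following: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            following[current][nxt] += 1
    return following


def most_likely_next(following: dict[str, Counter], word: str):
    """Return the most frequent continuation seen in the corpus, or None."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Invented toy corpus: "nurse" is followed by "she" more often than by "he".
corpus = [
    "the nurse she helps",
    "the nurse she works",
    "the nurse he works",
]
model = train_bigrams(corpus)
print(most_likely_next(model, "nurse"))  # prints "she"
```

Because "she" follows "nurse" twice and "he" only once in this invented corpus, the counter always continues with "she". Changing the proportions in the corpus changes the output, which mirrors the point about how the frequency of words and phrases in the data shapes the responses.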